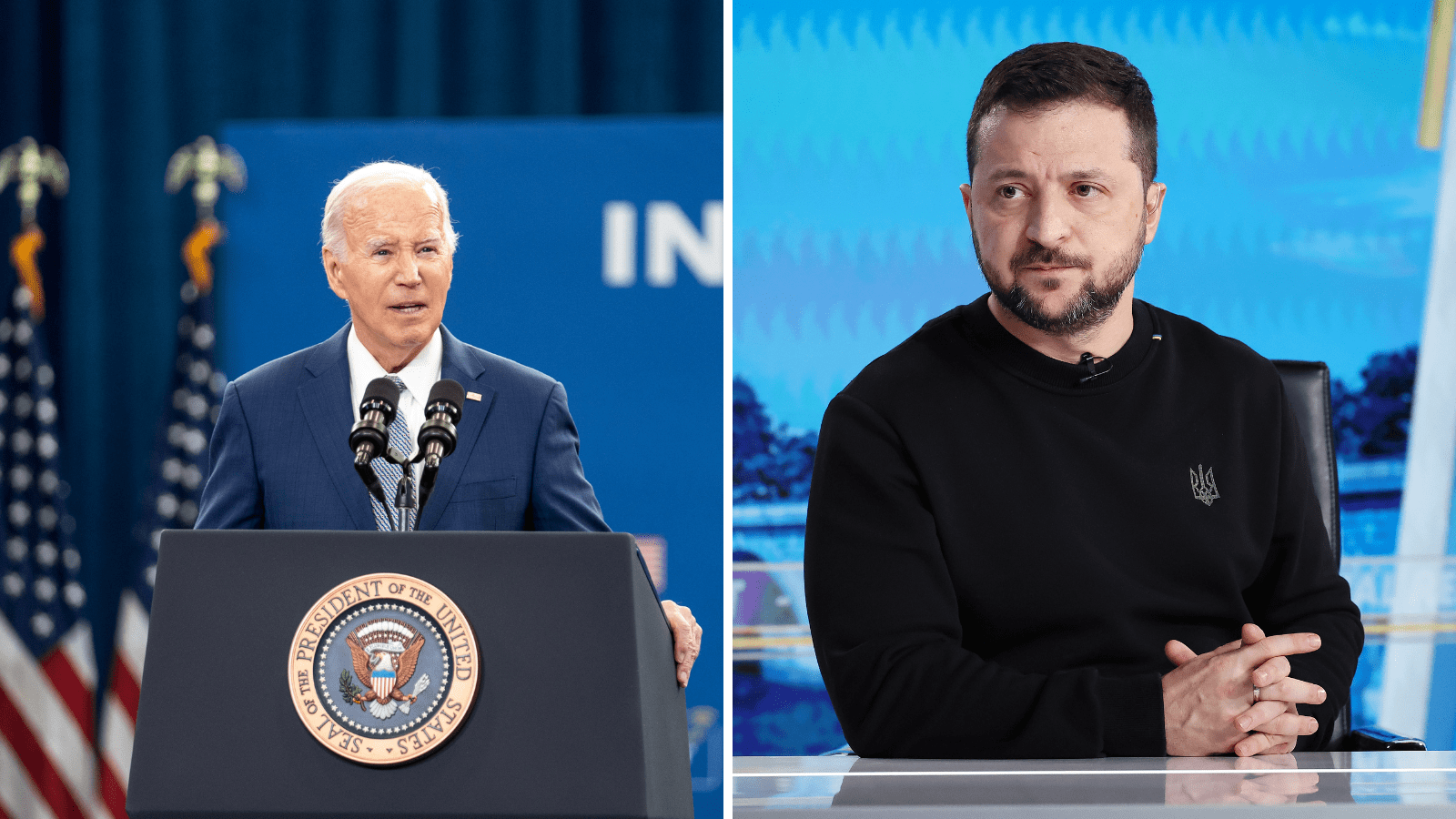

Opinion: Pundits long ago called what seems like a foregone conclusion: that the 2024 American general election will once again be a matchup between 45th President Donald Trump and 46th President Joe Biden. And people are not happy with the same old – about two-thirds of Americans are ‘tired of seeing the same candidates’, according to an early January Reuters/Ipsos poll.

It’s no wonder – their last matchup in 2020 led to political unrest, raucous partisan squabbling and ultimately a deadly storming of the Capitol that demonstrated the vulnerability of the revered American political system to extremism.

Yet look closer and this election has at least one major difference: in four years, the makeup of online social media campaigns has changed.

AI systems that were the domain of fantasy in 2020 are now everywhere, and make no mistake – they are being used, and will keep being used, to manipulate and aggravate, driving misinformed voters to and away from the polls in the process.

Earlier this year, thousands of New Hampshire primary voters received an automated call from a voice that sounded like Joe Biden, telling them not to head to the polls. Ads run by Republicans have used AI-generated images of an overrun America that has fallen into disrepair under Democratic rule. Trump AI rap songs have topped iTunes.

And perhaps most relevant given her recent tour of Australia, AI-generated photos that appeared to show Taylor Swift endorsing Donald Trump went viral, gaining millions of views across social media.

This is not just an American issue. As the Brookings Institution points out, a record number of countries will hold elections in 2024 – together they are home to more than 41 per cent of the world’s population and 42 per cent of global GDP.

Political endorsements, advertising, phone calls and messages can no longer be trusted – for each has been tainted by AI systems that can easily replicate them to deceive.

Tech companies have caught on to the threat. Meta intends to label all AI-generated images posted on Facebook, Instagram and Threads.

OpenAI has announced new policies to insert labels in content created using its platform – though even the company admits the labels are readily removable.

Election officials are quick to be optimistic. They point to past attempts to disrupt votes and suggest that they will continue to stay ahead of the curve.

Yet it is highly doubtful that any are fully aware of, or prepared to tackle, the combined might of multi-sensory lies (visual and voice) and the virality of shocking material on social media, which together give AI content a unique ability to reach millions of eligible voters in the next few months.

Here’s why we must worry.

Truth is the core tenet of our civil society. Many have sought to mislead in the past and have been eventually caught out, but some have always slipped through the cracks and influenced some votes – no system is perfect.

Now, however, the system is leaning towards being critically broken.

Our citizens cannot be expected to follow up on every piece of information they see online.

If they see an elected official relaying a falsified message that perturbs them, how are they meant to then go back days or months later to verify that what their eyes just showed them was indeed real?

More worryingly, how will we combat the inevitable dismissal of real videos from the past as ‘simply deepfakes’ designed as a political hit job?

America is often seen as a bellwether for the rest of the Western world. Late this year or early next year, Australia holds its first ‘AI-powered’ federal election.

Our political system is certainly not as extreme as America’s, and yet there is still emotion ripe for manipulation by hostile actors on social media.

Australia can no longer lag behind the world technologically as it becomes an increasingly attractive target.

Cyber-attacks have crippled systems and stolen data from millions in the last few years. Now hostile actors, both foreign and domestic, will act to mislead and exert their own influence on our elections, helped by a community more receptive to getting its news from social media platforms that have faced questions about their bias and foreign government control.

So what is our solution?

On the 14th of February, leading artificial intelligence companies announced they are planning to sign an agreement committing them to develop tools to identify, label and control AI-generated content that aims to deceive voters. This is only the start of our solution.

For the first time, we will fight a misinformation wave that can trick every single one of our senses. Companies like Adobe, the maker of Photoshop, have put out messaging about controlling false AI content, but even its stock image website includes fake images of the war in Gaza.

This means the responsibility must be shared in a three-pronged attack.

1. AI companies will need to take responsibility for labelling their AI-produced digital content and regulating the production of election-related or political content. They will need to actively educate voters on how to identify false AI content. They will also need higher verification standards for those assessed to be producing election content using AI tools.

2. Governments will need to legislate harsh punishment for the use of AI to deceive, in line with other election interference laws, and build robust election cybersecurity teams to prevent foreign governments from acting outside of domestic regulation.

3. Voters will have to become more active politically – with community teams such as those seen on ‘Community Notes’ on the X app working to debunk and tag false information before it reaches a critical mass of potential voters. If there is one benefit of AI’s rise, it will be that voters will need to be better informed to participate effectively in our political processes.

This is an impactful year for the world. Wars have broken out globally. Economies are still working to rein in inflation. Political extremism is on the rise and foreign powers are increasingly flexing their international digital influence to further their own agendas.

Any one of 100 issues – climate change, migration and border security, the Israel-Palestine conflict – can be manipulated to change a vote.

Understanding this core fact will allow us to build an AI framework that stays just ahead of the technology and to shape the next decade of truth-based democracy.
