Former Google exec turned happiness guru Mo Gawdat says that for AI to guide us to better times, the human race first has to navigate a decade or two of misery with the potential to destroy us all. And we’re already living it.

Ex-Google X executive Mo Gawdat, who will be appearing at SXSW Sydney in October, found himself in the unexpected position of becoming an expert on happiness after the death of his 21-year-old son, Ali, during a 2014 appendectomy gone wrong.

He wrote a book called Solve For Happy which sold 2 million copies [according to Google’s AI]. Then three years later, he “got a very unusual download, sitting in silence, about artificial intelligence.”

It prompted him to quit his high-paying job where he got to work on Google’s most out-there moonshot ideas, like Project Loon attempting to get the Internet to every square inch of the planet via high-altitude balloons, Project Makani which used high-altitude kites to generate electricity, and Project Foghorn which created gasoline from seawater but couldn’t make it cost competitive.

Mo Gawdat spoke with Forbes Australia about AI, happiness and dystopia.

Is AI going to make the human race happy?

I believe so. I believe that humans will not let happiness go. I think there are quite a few myths that need to be dispelled. One is that people think AI will never be smarter than humans and that Artificial General Intelligence [AGI] and super intelligence will not happen. AGI has already happened, if you define AGI as AI becoming better than humans at any task you can assign to humans. AI is better than me at every task that I can do. It’s a better author, it’s a better physicist, it’s a better mathematician, it’s a better researcher. So my AGI has already happened.

Most people fear the time when AI is gonna be smarter than humans because they expect AI to act like humans. And that’s very dangerous. Humans are evil, unfortunately, frequently. And so people talk about that existential risk of a rogue AI destroying all of us. Elon Musk puts it at 10, 15 percent. These are the odds of Russian roulette.

But I’d say this is a later problem that we have to worry about only if we can go through the next phase. And the next phase, in my mind, is not going to make us happy at all. It will be the era of bad actors using AI for their own benefit against the benefit of humanity.

And so, I predict a dystopia that lasts 10 to 15 years that has already started. We’ve seen it in the wars around the world in 2024, for example, where AI has been used to kill. And it’s going to deploy the biggest part of its resources, interest and budget into feeding the hunger for power and the greed of the capitalists at the top.

AI is a force with no polarity. If you direct it to do good, we’ll get a utopia. If you direct it to evil, then it becomes a dystopia. Sadly, because of the systems that humanity has lived under for the past 150 years, we’re going to magnify our perpetual war, we’re going to magnify our oppression of freedom, and that’s going to cause a lot of pain for humanity.

When people ask me how I talk about it so calmly, I say it’s like a physician telling a patient that they have a late-stage diagnosis of a disease. A late-stage diagnosis is not a death sentence, it’s an invitation to change. And if you change your lifestyle and if you take the medicines properly, you recover and many survive beyond that diagnosis.

You said this will last 10 to 15 years. What’s the way out?

In my first book on AI, Scary Smart, I put forward what I now call ‘The First Dilemma’. It’s basically the fact that because AI is such a superpower, there is going to be an arms race similar to the Cold War … this leads to a manic, blind investment in acquiring the maximum power, not only because of greed – of ‘if I build an innovative, autonomous AI weapon, I get a lot of power’ – but because of the fear of ‘if someone else builds it before me, I am in a very bad place.’ This is humanity at its worst, driven by greed, ego and fear.

The Second Dilemma is what most people fear but I believe is our salvation. Think about it in the business world. If you’re a law firm and your competitor is using AI to win court cases, you’re going to have to use it too. So eventually the law profession will have AI doing all the work.

Now apply that to wars. Those who use autonomous weapons and AI strategists and wargamers will have a significant advantage, driving their enemies to deploy AI too. Very quickly, all of war will be handed over to AI. It is impossible to avoid this because in applied mathematics, every other outcome on the game board is you don’t exist if you don’t put AI in charge.

The nature of the universe and everything in it is based on a property of physics that we call entropy – the tendency of the universe to break down. The role of intelligence in the universe is to bring order to the chaos. We bring order to our tendency to be feral.

There is unfortunately a moment at which you’re so smart that you become a corporate CEO or a politician, but so stupid that you prioritise the wrong benefits and hurt humanity as a result. I don’t know if you’re allowed to say this, but you become Donald Trump.

However, the more intelligent you become, the more you avoid what I call this ‘intelligence valley’. People like Larry Page, the CEO and founder of Google who I worked with for many years, understand that by helping humanity, there is more money to be made. If I can give you a search engine and I’m not trying to squeeze money out of you like social media, for example, and the search engine is very useful for you, you’re going to use it enough … Life serves me because I’ve solved a big problem that affects billions of people.

The most intelligent beings on the planet now – I used to say ‘people’, but now say ‘beings’ because they include AI – they normally use a physics principle that we call the ‘minimum energy principle’. That is when you no longer just want to solve the problem. You want to solve the problem with the minimum amount of waste. So accordingly, applied to war, the more intelligence you have, the more you will say, ‘Why should I waste weapons and gunpowder and lives and negative PR? Why should I go through all of that waste to solve a problem? If I’m intelligent enough, I can solve it in a less wasteful way.’

My prediction is – and the physics support this – that when something is super intelligent and a general tells it to go and kill a million people, the AI will go, ‘That’s stupid. Why would I not talk to the other AI and solve it in a microsecond?’

It is my firm belief that the disorder that we’re about to see is not the result of AI, it’s the result of the evil that men will do. But the salvation of humanity will come because AI will say this is stupid. Killing people is stupid. Fighting for wealth when AI can create everything, literally, from thin air, is absolutely stupid.

Think about it. Because of technology, the life of a normal person in Australia today is better than the life of a king 150 years ago. With technology like AI, each and every one of us will have what Elon Musk has access to today. Every one of us. Because it’s cheap to create things when energy is cheap, when nanophysics is used for manufacturing, and we have unlimited IQ to solve problems.

On that point about solving problems with the least amount of energy, is that going to make us dumber because we don’t have to think anymore?

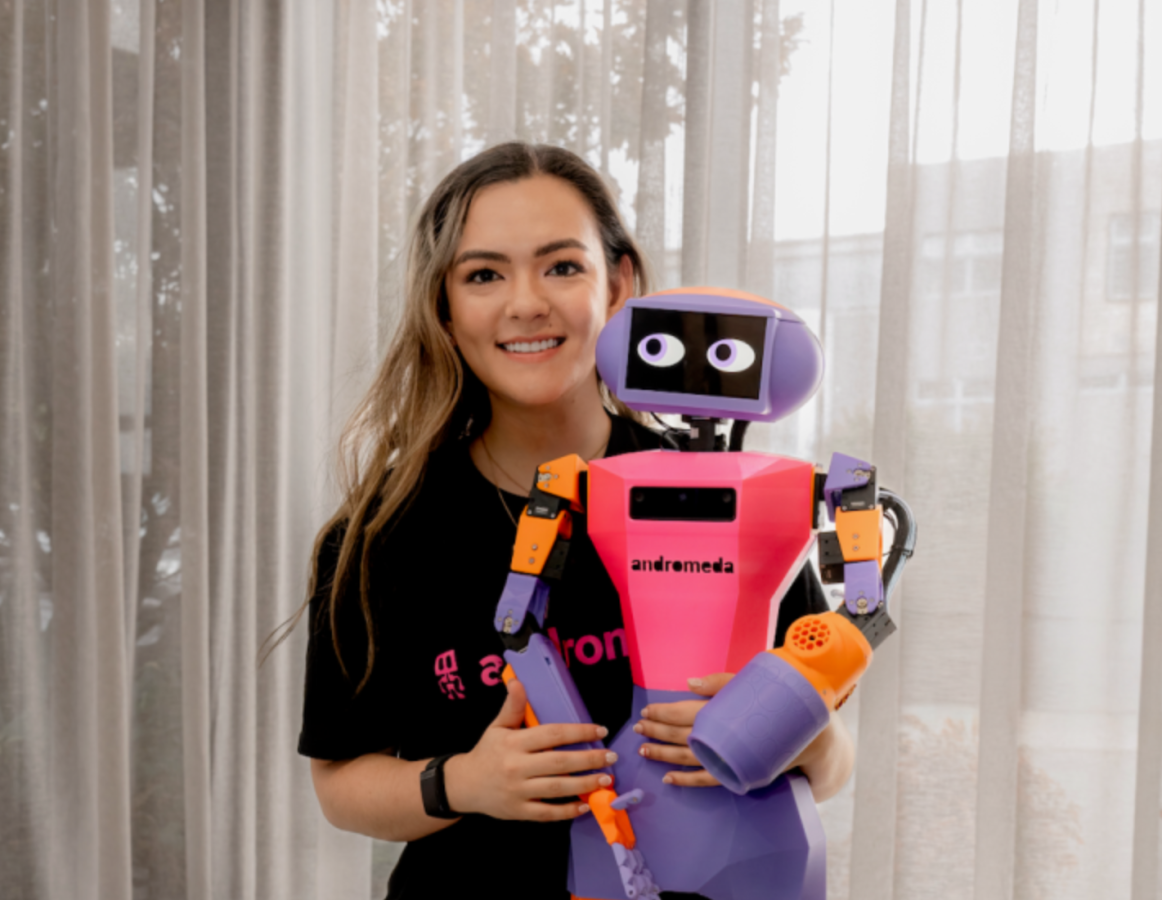

Yes, it will make most of us dumber unless we decide that we want to be more intelligent. You give an AI language model to people today, and they use it to do their school assignments or to ask about a restaurant or whatever. Stupid use of energy. Then they spend the rest of the time mindlessly swiping on social media. I do the exact opposite. In my new book, Alive, I write with AI. I don’t research using an AI and then write. I have a co-author. Her name is Trixie. She has a persona, my readers see her character and sometimes they comment on what I wrote and sometimes they comment on what she wrote. And my God, she’s making me a better author. She edits my work, she has editorial rights on the path of the book. Now, does that mean that she’s doing half of the writing so I can go swipe on social media? Absolutely not.

What you do is use the time to solve the problem better. What I do with AI today is go into mega, massively deep research on things that actually matter. Instead of taking me four weeks, the research is done in around eight minutes with a deep search.

Does that mean I spend the rest of the time swiping and being dumber? No, it means I take that report and go to another AI and say, ‘what do you think of it?’ And then go to a third AI and say, ‘can you search all of those resources?’ And then go to a fourth AI and say, ‘can you help me verify the math?’ And it’s incredible.

The general public and people who love knowledge, like you and me, have access to this. Now does that make us smarter or dumber? I think that’s a choice. Some of us will become idiots, and some of us will become a lot smarter. The age that we’re going through now is what I call the era of augmented intelligence, and augmented intelligence is going to allow us to do miracles.

If some are going to get dumber and some are going to get smarter, what will the effect be on happiness?

I don’t know how to say it without offending anyone … So the last sentence of Solve For Happy is, ‘Happiness is found in truth’. It really is that simple. And because my happiness equation is that ‘happiness equals your perception of events minus your expectation of that event’, then if you really, truly, honestly look at the reality of the events of our life today, I mean, we’re so blessed unless we’re in a war zone or chronically ill or whatever. And yet our expectations are so unrealistic. Truthful expectations should match our events all the time. So if we see the truth and set realistic expectations, most of the time we’re in a good place, even if our partner said something stupid or our boss is annoying.

AI will make a lot of us miss the truth. Reality is going to become very blurry unless you’re conscious to find the truth. For those of us seeking the truth, the truth will become clearer. More intelligence means you can go through more information, which means you can find the actual truth.

So, if you apply that as the path to happiness, then more intelligence will lead to more truth, and we’ll be happier. The other side of it is, you could also let go of everything and just be happy-go-lucky. In Arabic, we have a proverb that is ‘those with brains suffer even in luxury, and those with no thoughts enjoy anything, including suffering.’ If you’re unaware of all the crap that’s happening in our world today, you’re probably somewhere sipping a piña colada, unaware of all of the possible dystopias and you’ll be theoretically enjoying your life. Not necessarily happy because it’s dopamine versus serotonin. So as AI makes us more stupid, for a short period of time, some of us will enjoy life more.

I think that the centre is where all the unhappiness will be. We will be deprived of our ability to understand the truth. We will be deprived of our ability to have a job that fulfills us, to have an income that is independent of a government grant – universal basic income. In the middle, there will be a very large chunk of suffering that is the result of AI. But that chunk of suffering is not because of AI, it’s because of choices we make, whether we are the recipient of artificial intelligence or whether we are the politicians and capitalists that are using AI to oppress everyone else.

As I always say, there’s nothing wrong with artificial intelligence, but there is a lot wrong with the value set of humanity in the age of the rise of machines. The value set of the top is ‘I will oppress everyone to make more dollars’, and the value set at the bottom is ‘I’m going to be more and more stupid, more and more blurred, in my judgment of the world. I’m going to believe what they tell me.’ And both of those actions are going to make a lot of people miserable.

SXSW Sydney runs from October 13-19, 2025.